TL;DR: Scientists have transformed ant trail formation into powerful optimization algorithms now used in Amazon warehouses, internet routing, and autonomous vehicles. These bio-inspired systems solve complex problems through simple rules and collective behavior, often outperforming traditional methods.

Every day, billions of packages move across the planet, finding their way through warehouses the size of small cities. Internet data packets zip through networks at close to light speed, taking optimal routes you'll never see. Delivery trucks navigate cities with mathematical precision. Behind these feats of modern logistics lies an unlikely genius: the humble ant. Scientists have translated the chemical trails these insects leave into algorithms that now power Amazon's warehouses, optimize telecommunication networks, and route autonomous vehicles. What began as curiosity about how ants always seem to find the shortest path has become one of the most powerful optimization techniques in computer science.

Ants are nearly blind, yet they build highways between their nests and food sources that rival our best-planned infrastructure. The secret lies in pheromones, chemical compounds that ants deposit as they walk. When a foraging ant discovers food, it returns home while laying down a trail of pheromones, essentially marking its route for others to follow. Here's where it gets interesting: shorter paths accumulate pheromones faster because more ants complete the journey in less time. Longer routes gradually fade as the chemicals evaporate.

This creates a feedback loop that biologists call stigmergy - indirect communication through environmental modifications. No ant understands the overall problem. No central planner coordinates the traffic. Each individual follows simple rules: follow strong pheromone trails when you find them, and strengthen the path you took when you succeed. The colony's collective intelligence emerges from thousands of tiny decisions, each one slightly influenced by the decisions that came before.

Scientists at the Université Libre de Bruxelles discovered this pattern in the 1980s while studying Argentine ants choosing between two paths to food. When both routes were the same length, the colony tended to settle on one of them more or less at random. But when one path was shorter, the colony converged on it within minutes. The researchers realized they weren't watching instinct - they were watching an optimization algorithm written in chemistry.
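The two-path feedback loop can be illustrated with a small deterministic sketch. This is a simplified averaged model written for this article, not the researchers' own model: the function name, the evaporation rate, and the squared preference for stronger trails are all illustrative assumptions.

```python
def simulate_double_bridge(short_len=1.0, long_len=2.0, steps=100,
                           evaporation=0.1, exponent=2.0):
    """Averaged model of a two-branch bridge (illustrative assumption).

    Returns the fraction of traffic choosing the short branch at each step.
    """
    tau_short, tau_long = 1.0, 1.0  # equal pheromone on both branches at first
    shares = []
    for _ in range(steps):
        # Ants prefer the branch with more pheromone (nonlinear preference).
        w_short = tau_short ** exponent
        w_long = tau_long ** exponent
        p_short = w_short / (w_short + w_long)
        shares.append(p_short)
        # Trips on the short branch finish sooner, so it is reinforced at a
        # higher rate per unit of traffic. Both trails also evaporate.
        tau_short = (1 - evaporation) * tau_short + p_short / short_len
        tau_long = (1 - evaporation) * tau_long + (1 - p_short) / long_len
    return shares


shares = simulate_double_bridge()
print(f"short-branch share: start={shares[0]:.2f}, end={shares[-1]:.2f}")
```

Because this sketch tracks averages rather than individual ants, it cannot break a tie between two equal branches. In a real colony, random fluctuations do that job.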

What makes this remarkable is its robustness. If you suddenly block the optimal path, the pheromone trail evaporates and ants quickly establish a new route. The system adapts to change without any ant needing to "know" what happened. This resilience would later become one of the most valuable features of ant-inspired algorithms.

In 1992, computer scientist Marco Dorigo transformed this biological phenomenon into Ant Colony Optimization (ACO), and everything changed. His insight was elegant: what if we created virtual ants that explored solution spaces the same way real ants explore terrain?

The mathematical translation is surprisingly straightforward. In ACO algorithms, artificial ants traverse graphs where nodes represent decision points and edges represent possible choices. Each ant constructs a solution by probabilistically choosing edges, with the probability weighted by artificial pheromone levels. When an ant completes its journey, it deposits pheromone proportional to solution quality - more for better solutions, less for worse ones.
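As a minimal sketch of that description, the snippet below lets ants walk a tiny graph and reinforce the edges they used. The graph, the function names `walk` and `deposit`, and the deposit rule `q / cost` are hypothetical choices made for illustration. Real ACO variants differ in the details.

```python
import random

# Hypothetical toy graph: edge costs from each node to its successors.
GRAPH = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"D": 1.0},
    "C": {"D": 1.0},
    "D": {},
}


def walk(graph, pheromone, start, goal, rng):
    """Let one ant walk from start to goal, choosing edges by pheromone weight."""
    path, cost, node = [start], 0.0, start
    while node != goal:
        successors = list(graph[node])
        weights = [pheromone[(node, nxt)] for nxt in successors]
        nxt = rng.choices(successors, weights=weights)[0]
        cost += graph[node][nxt]
        path.append(nxt)
        node = nxt
    return path, cost


def deposit(pheromone, path, cost, q=1.0):
    """Reinforce every edge on the path; cheaper paths earn more pheromone."""
    for a, b in zip(path, path[1:]):
        pheromone[(a, b)] += q / cost


rng = random.Random(0)
pheromone = {(a, b): 1.0 for a, nbrs in GRAPH.items() for b in nbrs}
for _ in range(200):
    path, cost = walk(GRAPH, pheromone, "A", "D", rng)
    deposit(pheromone, path, cost)
print(pheromone)
```

The route through `B` costs 2 and the route through `C` costs 5, so each trip through `B` deposits more pheromone per edge. Over many walks this tends to tilt later ants toward the cheaper route.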

The probability formula captures the magic: an ant choosing between paths considers both pheromone intensity (learned collective wisdom) and heuristic information (greedy short-term benefit). A parameter called alpha controls how much ants trust the collective memory, while beta determines how much they rely on obvious short-term gains. Pheromone evaporation helps keep the system from getting stuck on suboptimal solutions that were merely discovered early.
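In the standard formulation, the weight of each candidate edge is its pheromone level raised to alpha times its heuristic value raised to beta, and the weights are normalized into probabilities. The sketch below shows that rule. The function name and the sample numbers are illustrative assumptions.

```python
def choice_probabilities(pheromone, heuristic, alpha=1.0, beta=2.0):
    """Return the probability of picking each candidate edge.

    pheromone[i] is the trail strength and heuristic[i] the greedy
    desirability (for routing, often 1 / distance) of candidate i.
    """
    weights = [(tau ** alpha) * (eta ** beta)
               for tau, eta in zip(pheromone, heuristic)]
    total = sum(weights)
    return [w / total for w in weights]


# Two candidate edges: one with a strong trail but long, one weak but short.
tau = [3.0, 1.0]
eta = [1 / 4.0, 1 / 2.0]
print(choice_probabilities(tau, eta, alpha=1.0, beta=0.0))  # trail only
print(choice_probabilities(tau, eta, alpha=0.0, beta=1.0))  # distance only
print(choice_probabilities(tau, eta))                       # both combined
```

Setting beta to zero makes ants follow trails alone, and setting alpha to zero makes them purely greedy. Practical settings sit somewhere in between.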

This mirrors how real ants balance exploration and exploitation. Early in the process, when pheromone trails are weak, ants explore broadly. As good solutions emerge and their trails strengthen, the colony exploits what it's learned. But because every choice stays probabilistic and unreinforced trails evaporate, the system can still escape local optima - those frustratingly decent solutions that aren't quite optimal but seem better than anything nearby.
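The role of evaporation can be shown with a few lines of arithmetic. In this sketch the update rule (decay by a fraction `rho`, then add any new deposit) is the common textbook form, while the route names and numbers are made up for illustration.

```python
def evaporate_and_deposit(pheromone, deposits, rho=0.1):
    """Apply one update: every trail decays by rho, then gains its deposit."""
    return {edge: (1 - rho) * tau + deposits.get(edge, 0.0)
            for edge, tau in pheromone.items()}


# An early-found mediocre route starts strong but stops being reinforced,
# while a better route found later keeps receiving deposits.
pheromone = {"early_route": 5.0, "better_route": 0.5}
for step in range(30):
    pheromone = evaporate_and_deposit(pheromone, {"better_route": 0.3})
print(pheromone)
```

After enough rounds the unreinforced trail has faded to a small fraction of its starting value, while the reinforced one approaches `deposit / rho`.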

What computer scientists immediately recognized was ACO's power for NP-hard problems - those notorious computational challenges for which no known algorithm finds the guaranteed best solution efficiently as the problem grows. The traveling salesman problem, a classic example, asks for the shortest route that visits each of a set of cities exactly once and returns to the start. As cities increase, the number of possible routes grows factorially, which is even faster than exponentially. Checking every possibility quickly becomes computationally infeasible, but ACO can find very good solutions without exhaustive search.
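To put numbers on that growth, the sketch below counts distinct round trips, assuming the distance between two cities is the same in both directions. The function name is illustrative.

```python
from math import factorial


def distinct_tours(n_cities):
    """Number of distinct round trips through n cities (symmetric distances).

    Fixing the start city leaves (n - 1)! orderings; each tour is counted
    twice because it can be travelled in either direction.
    """
    if n_cities < 3:
        return 1
    return factorial(n_cities - 1) // 2


for n in (5, 10, 20):
    print(n, distinct_tours(n))
# 5 12
# 10 181440
# 20 60822550204416000
```

Going from 10 to 20 cities multiplies the search space by a factor of several hundred billion, which is why exhaustive search stops being an option so quickly.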

The transformation from theory to practice happened faster than anyone expected. By the early 2000s, companies were deploying ACO in real-world systems, and the results were startling.

Amazon's Kiva robots, which transformed warehouse automation, use swarm intelligence principles descended directly from ant colony research. These orange robots don't follow predetermined paths - they dynamically optimize routes through warehouses, adapting in real-time as orders change and obstacles appear. Each robot's successful path influences the routing probabilities for subsequent robots, creating emergent optimization patterns eerily similar to ant highways. The system handles thousands of simultaneous orders without central coordination, exactly like an ant colony manages thousands of foragers without a master plan.

Telecommunications companies discovered ACO could optimize network routing more effectively than traditional algorithms. When data packets travel across the internet, they face decisions at every router about which path to take next. Early routing algorithms used fixed rules or centralized calculations. ACO-based approaches treat data packets like ants, letting them deposit virtual pheromones on successful routes. This creates routing tables that automatically adapt to network congestion, equipment failures, and traffic patterns - without requiring constant human intervention or centralized recalculation.
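A rough sketch of the idea follows, similar in spirit to published ant-based routing schemes such as AntNet. Each router keeps, per destination, a probability for every neighbor, and a successful ant packet shifts probability toward the hop it used. The router layout, the function name, and the reinforcement value are assumptions made for illustration.

```python
def reinforce(routing_row, chosen_hop, r):
    """Shift probability toward chosen_hop by reinforcement r in (0, 1).

    routing_row maps each neighbor to the probability of forwarding to it
    for one destination. The row still sums to 1 after the update.
    """
    return {hop: (p + r * (1 - p)) if hop == chosen_hop else (p - r * p)
            for hop, p in routing_row.items()}


# Hypothetical router with three neighbors, initially indifferent.
row = {"north": 1 / 3, "east": 1 / 3, "south": 1 / 3}
# An ant packet reports that going east reached the destination quickly.
row = reinforce(row, "east", r=0.3)
print(row)
```

Because unchosen hops shrink by the same factor, the update acts like evaporation on routes that stop being reported as good. That is what lets the table drift away from a congested or failed link.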

"What began as curiosity about insect behavior has transformed into mission-critical infrastructure powering trillion-dollar industries."

- Marco Dorigo, Creator of ACO

The logistics revolution extends beyond warehouses. Ride-sharing companies use ACO-inspired algorithms to optimize driver routes and match riders with drivers. Delivery services apply similar principles to vehicle routing problems, where trucks must visit dozens of stops efficiently. Energy grid operators use swarm intelligence to balance load distribution across power networks.

Manufacturing saw perhaps the most dramatic impact. Production scheduling - determining the optimal sequence for manufacturing different products - is a fiendishly complex problem when you factor in machine availability, setup times, deadlines, and resource constraints. ACO algorithms now help factories schedule production lines, reducing downtime and improving efficiency by double-digit percentages in some implementations.

The next leap came when researchers realized they could apply ant principles to physical robot swarms. Harvard's Wyss Institute developed Kilobot swarms - collections of up to 1,024 simple robots that self-organize to form shapes and solve spatial problems using only local communication and simple rules.

These robots don't have detailed maps or GPS. Like ants, each robot knows only its immediate surroundings and follows rules about how to move relative to neighbors. Yet the swarm can assemble into complex patterns, explore unknown environments, and adapt when individual robots fail. The parallels to ant colonies are explicit in the design - researchers directly translated chemical gradient following into robot communication protocols.
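One simple way to picture gradient following with only local communication is a hop-count gradient: a source robot holds the value 0, and every other robot repeatedly sets its own value to one more than the smallest value it hears from nearby neighbors. The sketch below is an illustration under that assumption, not the actual robot firmware. The function name, positions, and communication radius are hypothetical.

```python
import math


def hop_gradient(positions, source, comm_radius):
    """Compute each robot's hop distance to the source using only local rules.

    Each round, every robot sets its value to 1 + the smallest value among
    neighbors within comm_radius. The source holds 0.
    """
    n = len(positions)
    neighbors = [[j for j in range(n) if j != i
                  and math.dist(positions[i], positions[j]) <= comm_radius]
                 for i in range(n)]
    value = [math.inf] * n
    value[source] = 0
    for _ in range(n):  # n rounds is enough for the values to settle
        new_value = list(value)
        for i in range(n):
            if i != source and neighbors[i]:
                new_value[i] = 1 + min(value[j] for j in neighbors[i])
        value = new_value
    return value


robots = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
print(hop_gradient(robots, source=0, comm_radius=1.5))  # [0, 1, 2, 3, 4]
```

No robot in this sketch knows its coordinates or the size of the swarm. A robot that wants to move toward the source only needs to head for neighbors reporting smaller values.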

The implications for disaster response are profound. Traditional rescue robots require sophisticated sensors, detailed maps, and often human control. Ant-inspired swarms could explore collapsed buildings or hazardous sites using dozens of cheap, expendable robots that self-coordinate. If some robots fail, the swarm adapts. If the environment changes, the swarm responds. The system's intelligence emerges from collective behavior rather than individual capability.

Agricultural robotics has embraced similar principles. Swarms of small robots can monitor crop health, identify pest problems, and even perform targeted interventions - essentially mimicking how ant colonies collectively manage their fungus gardens. Each robot contributes limited observations, but the collective builds comprehensive understanding.

Mining and space exploration companies are testing ant-inspired systems for environments too dangerous or remote for human oversight. A swarm of simple rovers exploring Mars could cover more ground and survive more failures than a single sophisticated robot. If communication with Earth is delayed or disrupted, the swarm continues making collective decisions based on local rules.

The success of ACO in so many domains raises an obvious question: what makes it better than the optimization methods we already had?

Traditional algorithms often require complete information upfront. They want to know all possible states, all possible actions, all constraints. ACO works with partial information and adapts as it learns. This makes it incredibly valuable for real-world problems where conditions change, information is incomplete, or the problem itself shifts over time.

ACO also handles multi-objective optimization naturally. Real logistics problems don't just minimize distance - they balance distance, time, fuel costs, delivery windows, traffic patterns, and vehicle capacity simultaneously. Traditional algorithms struggle with these trade-offs. ACO's probabilistic nature lets it explore different balances and converge on solutions that optimize across multiple criteria without requiring explicit weighting of objectives.

Perhaps most importantly, ACO scales elegantly. Adding more ants to the colony improves solution quality and exploration without fundamentally changing the algorithm. You can't simply "add more" to a traditional optimization algorithm the way you can increase ACO's ant population. This parallelizability maps perfectly to modern computing architectures with multiple processors.

Recent research has pushed these advantages even further. A 2024 paper on neural-enhanced ACO for the traveling salesman problem combined deep learning with traditional ACO, achieving performance improvements of 15-30% over standard approaches. The neural network learns patterns in which pheromone distributions lead to good solutions, essentially teaching the artificial ants to be smarter about where they explore.

Other researchers have applied ACO principles to machine learning itself. The ACO-ToT framework treats large language models as ants exploring spaces of reasoning paths, with pheromones marking successful logical steps. The system achieved 84.2% accuracy on mathematical reasoning tasks, dramatically outperforming traditional prompting methods. The ants had learned to optimize thought itself.

Understanding why ant-inspired algorithms work so well requires appreciating the biological sophistication they're modeling. Ant colonies aren't just random creatures stumbling into efficiency - they're the product of 100 million years of evolutionary optimization.

Trail pheromones are chemically complex. Different ant species use different compounds, and some use combinations that create distinct "dialects" preventing confusion when multiple species' territories overlap. The evaporation rate isn't accidental - it's tuned by evolution to match the scale of the ant's foraging range. Too slow and trails become permanent highways even to exhausted food sources. Too fast and ants can't effectively share information.

Recent research revealed that ants don't just follow the strongest trail blindly. They show what biologists call "informed decision-making" - they weigh trail strength against other factors like visual landmarks, memory of past success, and even apparent crowding on a path. Some ants intentionally take suboptimal routes to explore alternatives, maintaining the colony's ability to adapt to change.

The pheromone reinforcement mechanism resembles Hebbian learning in neural networks - neurons that fire together wire together. Frequently traversed paths get stronger, just as frequently activated synapses become more efficient. This parallel isn't coincidental; both systems evolved to solve the fundamental problem of learning from experience without central coordination.

Even the ants' apparent randomness serves a purpose. Early mathematicians modeling ant behavior were puzzled by variations in individual ant decisions that seemed like noise. Later analysis showed this "noise" prevents the colony from prematurely converging on suboptimal solutions. Evolution built randomness into the algorithm because it improves long-term performance. Computer scientists designing ACO variants have learned to carefully tune this randomness - too little and the system gets stuck, too much and it fails to converge.

The cutting edge of ant-inspired computing lies in hybrid systems that combine multiple optimization approaches. Researchers are crossing ACO with genetic algorithms, simulated annealing, and neural networks, creating optimization techniques that leverage the strengths of multiple strategies.

A recent study on PID controller tuning for renewable energy systems compared pure ACO against genetic algorithms and hybrid approaches. The hybrid system outperformed either technique alone, using ACO for broad exploration and genetic algorithms for fine-tuning promising regions of solution space. These systems are now helping optimize wind farm operations and solar panel arrays, maximizing energy capture under varying conditions.

Reinforcement learning merged with swarm intelligence has created entirely new approaches to robot control. Instead of pre-programming robot behaviors, researchers train swarm controllers using reward signals, allowing the system to discover novel coordination strategies that human designers might never imagine. These learned swarm behaviors have solved problems like collective transport of large objects, adaptive formation control, and coordinated surveillance.

The latest frontier applies swarm principles to abstract optimization problems previously considered unsuitable for bio-inspired methods. Financial portfolio optimization, drug discovery molecular searches, and even urban planning are seeing experimental applications of ACO variants. A pharmaceutical company used ACO to search the enormous space of possible molecular modifications for a drug candidate, finding promising compounds that traditional computational chemistry approaches had missed.

Despite successes, ant-inspired algorithms face real limitations. ACO requires careful parameter tuning - alpha, beta, evaporation rate, ant population size all significantly affect performance. Set them wrong and the algorithm either converges too quickly on poor solutions or wanders indefinitely without finding good ones. Traditional optimization methods often have more predictable behavior.
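To make those parameters concrete, here is a compact sketch of a basic ACO loop for a small traveling salesman instance. It is a teaching example under stated assumptions, not a production implementation: the `AcoParams` defaults, the `1 / length` deposit rule, and the six-city test instance are illustrative choices.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class AcoParams:
    alpha: float = 1.0        # weight on pheromone (collective memory)
    beta: float = 2.0         # weight on heuristic (1 / distance)
    evaporation: float = 0.5  # fraction of pheromone lost per iteration
    n_ants: int = 10
    n_iterations: int = 50
    seed: int = 0


def tour_length(tour, dist):
    """Length of the closed tour, including the edge back to the start."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]]
               for i in range(len(tour)))


def build_tour(dist, tau, params, rng):
    """One ant builds a tour, city by city, using the alpha/beta rule."""
    n = len(dist)
    tour = [rng.randrange(n)]
    unvisited = set(range(n)) - {tour[0]}
    while unvisited:
        here = tour[-1]
        candidates = sorted(unvisited)
        weights = [tau[here][c] ** params.alpha
                   * (1.0 / dist[here][c]) ** params.beta
                   for c in candidates]
        nxt = rng.choices(candidates, weights=weights)[0]
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def solve_tsp(dist, params):
    rng = random.Random(params.seed)
    n = len(dist)
    tau = [[1.0] * n for _ in range(n)]
    best_tour, best_len = None, math.inf
    for _ in range(params.n_iterations):
        tours = [build_tour(dist, tau, params, rng)
                 for _ in range(params.n_ants)]
        # Evaporate everywhere, then let each ant deposit 1 / length.
        tau = [[(1 - params.evaporation) * t for t in row] for row in tau]
        for tour in tours:
            length = tour_length(tour, dist)
            if length < best_len:
                best_tour, best_len = tour, length
            for i in range(n):
                a, b = tour[i], tour[(i + 1) % n]
                tau[a][b] += 1.0 / length
                tau[b][a] += 1.0 / length
    return best_tour, best_len


# Six hypothetical cities on a circle; the best tour follows the perimeter.
cities = [(math.cos(k * math.pi / 3), math.sin(k * math.pi / 3))
          for k in range(6)]
dist = [[math.dist(p, q) for q in cities] for p in cities]
best_tour, best_len = solve_tsp(dist, AcoParams())
print(best_tour, round(best_len, 3))
```

Rerunning with, say, `AcoParams(beta=0.0)` or a very low `evaporation` is a quick way to see how much the outcome depends on these settings, even on a toy instance.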

Computational cost can be significant. Running thousands of virtual ants through many iterations requires substantial processing power. For small-scale problems, simpler algorithms often work better. ACO shines on complex, large-scale, dynamic problems, but using it for simpler tasks is overkill.

The probabilistic nature that makes ACO flexible also means it doesn't guarantee optimal solutions. It finds excellent solutions with high probability, but you can't prove optimality the way you can with some traditional methods. For applications requiring provable correctness - safety-critical systems, legal compliance verification - this uncertainty is problematic.

Integration challenges persist in real-world deployment. Implementing ACO in existing logistics systems requires substantial software engineering. The algorithms must interface with legacy databases, respect business rules, and provide explanations for decisions that managers can understand. Pure ACO doesn't naturally produce human-interpretable reasoning about why it chose particular solutions.

The trajectory seems clear: ant-inspired algorithms will become more sophisticated, more widely deployed, and more deeply integrated into infrastructure we barely notice. Several trends are accelerating this transformation.

Hardware is catching up to algorithms. Neuromorphic chips - processors designed to mimic brain architecture - happen to be excellent at running swarm intelligence algorithms. These chips process many simple operations in parallel with minimal power consumption, exactly what ACO needs. As neuromorphic computing becomes mainstream, ACO and similar techniques will run faster and more efficiently.

The Internet of Things creates perfect conditions for swarm intelligence. Billions of connected devices, each with limited individual capability, making local decisions that affect collective outcomes - that's an ant colony at global scale. Smart cities will increasingly use ACO-inspired techniques to optimize traffic light timing, energy distribution, waste collection routes, and emergency response dispatch. The system will learn and adapt continuously without human reconfiguration.

Autonomous vehicle coordination represents perhaps the highest-stakes application. When self-driving cars communicate and coordinate, they'll essentially form a mobile swarm. ACO principles could optimize traffic flow across entire cities, with each vehicle's routing decisions depositing virtual pheromones that influence subsequent vehicles. Early simulations suggest this could reduce commute times by 30-40% compared to individual route optimization.

"Within a decade, swarm intelligence will be managing traffic flow, energy grids, and supply chains at scales that would overwhelm any centralized system."

- Harvard Wyss Institute Research Team

Climate change response may benefit from ant-inspired coordination. Managing complex environmental systems - water distribution during droughts, evacuation during disasters, resource allocation for adaptation projects - involves exactly the kind of distributed, dynamic optimization that ACO handles well. The European Union has funded research into using swarm intelligence for climate adaptation planning.

Step back from specific applications and something profound emerges. Ant colony optimization represents a fundamentally different approach to problem-solving than the methods that dominated 20th-century computing.

Traditional algorithms are top-down: a designer understands the problem, devises a solution strategy, and programs a computer to execute it. ACO is bottom-up: a designer creates simple rules for simple agents, and solutions emerge from their interactions. This shift mirrors broader changes in how we understand complex systems across many fields.

You see this in how companies organize. The old model was hierarchical command and control - executives make decisions, middle managers implement them, workers execute them. Modern organizations increasingly emphasize distributed decision-making, self-organizing teams, and emergent strategy. They're essentially becoming more like ant colonies and less like traditional bureaucracies.

The military has noticed. Swarm tactics for unmanned systems are replacing centralized command structures in some applications. A swarm of drones doesn't need constant communication with commanders to coordinate surveillance or respond to threats. Like ants, they follow simple rules that produce sophisticated collective behavior even when communication is limited or jammed.

Economic systems show similar patterns. Market economies are essentially large-scale swarm intelligence systems where individual decisions based on local information (prices, personal preferences, immediate needs) produce collective optimization of resource allocation. No central planner directs the economy, yet goods flow to where they're needed, production adjusts to demand, and innovation emerges from distributed experimentation. The parallels to ant colonies aren't perfect, but they're instructive.

As ant-inspired algorithms become more prevalent, what capabilities will professionals need to work with them effectively?

First, thinking in terms of collective behavior rather than individual intelligence becomes essential. Debugging an ACO system isn't like debugging traditional code where you trace through sequential logic. You need to understand how local rules produce global patterns, recognize when emergent behavior is desirable versus problematic, and tune parameters that affect collective dynamics rather than individual actions.

Second, interdisciplinary knowledge grows more valuable. The best ACO practitioners understand biology, computer science, mathematics, and the domain they're optimizing. You can't effectively apply swarm intelligence to urban traffic without understanding both the algorithms and how cities actually function. The most innovative applications come from people who can see parallels between ant behavior and problems in completely different fields.

Third, comfort with probabilistic rather than deterministic outcomes matters. Engineers trained on traditional systems expect predictable, repeatable results. ACO introduces randomness by design. Learning to evaluate probabilistic performance, tune systems for statistical optimization, and explain non-deterministic behavior to stakeholders requires a different mindset.
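In practice, evaluating a randomized optimizer usually means running it several times with different seeds and reporting the spread rather than a single number. The sketch below shows one way to do that. `summarize_runs` is a hypothetical helper and `noisy_optimizer` is only a stand-in for a real ACO run.

```python
import random
import statistics


def summarize_runs(run_once, seeds):
    """Run a stochastic optimizer once per seed and summarize the costs."""
    costs = [run_once(seed) for seed in seeds]
    return {
        "best": min(costs),
        "worst": max(costs),
        "mean": statistics.mean(costs),
        "stdev": statistics.stdev(costs) if len(costs) > 1 else 0.0,
    }


def noisy_optimizer(seed):
    """Stand-in for a real ACO run: returns a cost that varies with the seed."""
    rng = random.Random(seed)
    return 100.0 + rng.random() * 10.0


print(summarize_runs(noisy_optimizer, seeds=range(20)))
```

Fixing the seeds makes each individual run repeatable, which helps when a stakeholder asks why two runs of the same system produced different answers.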

Data literacy becomes crucial because ant-inspired systems learn from experience. You need to recognize when training data is biased, identify what metrics actually matter for system performance, and understand how to set up environments where swarm systems can learn effectively. Bad data leads to bad emergent behavior just as surely as bad training produces poor performance in machine learning.

Where does this lead? The honest answer is we're still discovering what's possible.

Some researchers believe swarm intelligence will merge with artificial intelligence to create hybrid systems more capable than either approach alone. Imagine AI systems that don't just process information but explore solution spaces collectively, with different neural networks acting like different ant species, each specialized for different terrain, all contributing to colony-level intelligence.

Others see applications in fields we haven't imagined yet. The principles of stigmergy and emergent optimization might apply to social coordination problems - how communities make collective decisions, how information spreads through networks, how cultural practices evolve. Could we design social technologies that help human groups coordinate more effectively by learning from ant colonies?

The ethical dimensions deserve attention too. Swarm systems make decisions in ways that are harder to audit or explain than traditional algorithms. When an ACO system optimizes delivery routes in ways that inadvertently create environmental justice issues or discriminate against certain neighborhoods, how do we address that? The distributed nature that makes these systems powerful also makes them harder to regulate or correct.

Yet the fundamental insight remains compelling: simple rules, followed by simple agents, can solve extraordinarily complex problems through collective behavior. That insight, first discovered in forest floors and now embedded in code running data centers, continues to reshape how we approach optimization.

The ants figured this out 100 million years ago. We're only beginning to understand what they've been trying to teach us.

What problems might we solve next by asking: how would an ant colony handle this? The answer might be crawling right under our feet, waiting to be decoded into the next algorithmic revolution.

Sagittarius A*, the supermassive black hole at our galaxy's center, erupts in spectacular infrared flares up to 75 times brighter than normal. Scientists using JWST, EHT, and other observatories are revealing how magnetic reconnection and orbiting hot spots drive these dramatic events.

Segmented filamentous bacteria (SFB) colonize infant guts during weaning and train T-helper 17 immune cells, shaping lifelong disease resistance. Diet, antibiotics, and birth method affect this critical colonization window.

The repair economy is transforming sustainability by making products fixable instead of disposable. Right-to-repair legislation in the EU and US is forcing manufacturers to prioritize durability, while grassroots movements and innovative businesses prove repair can be profitable, reduce e-waste, and empower consumers.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

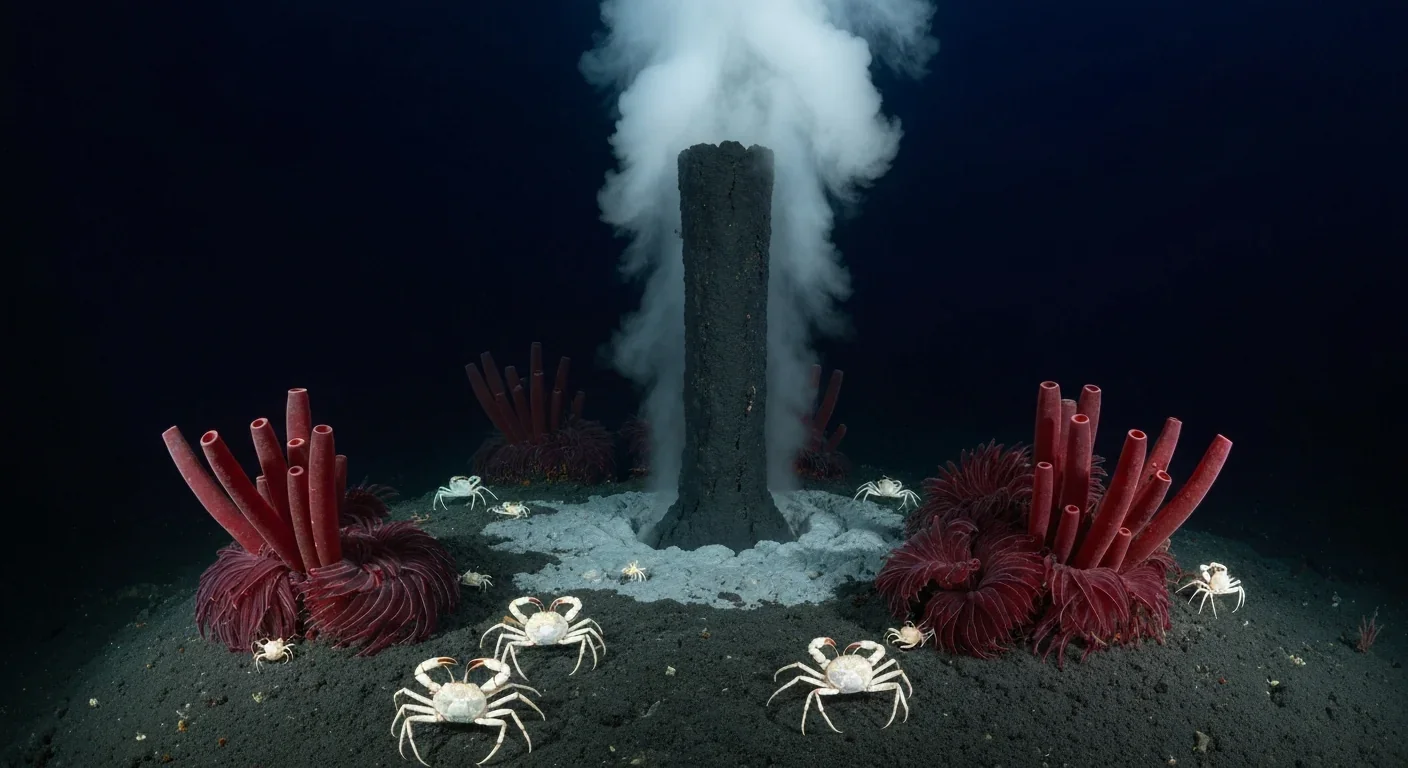

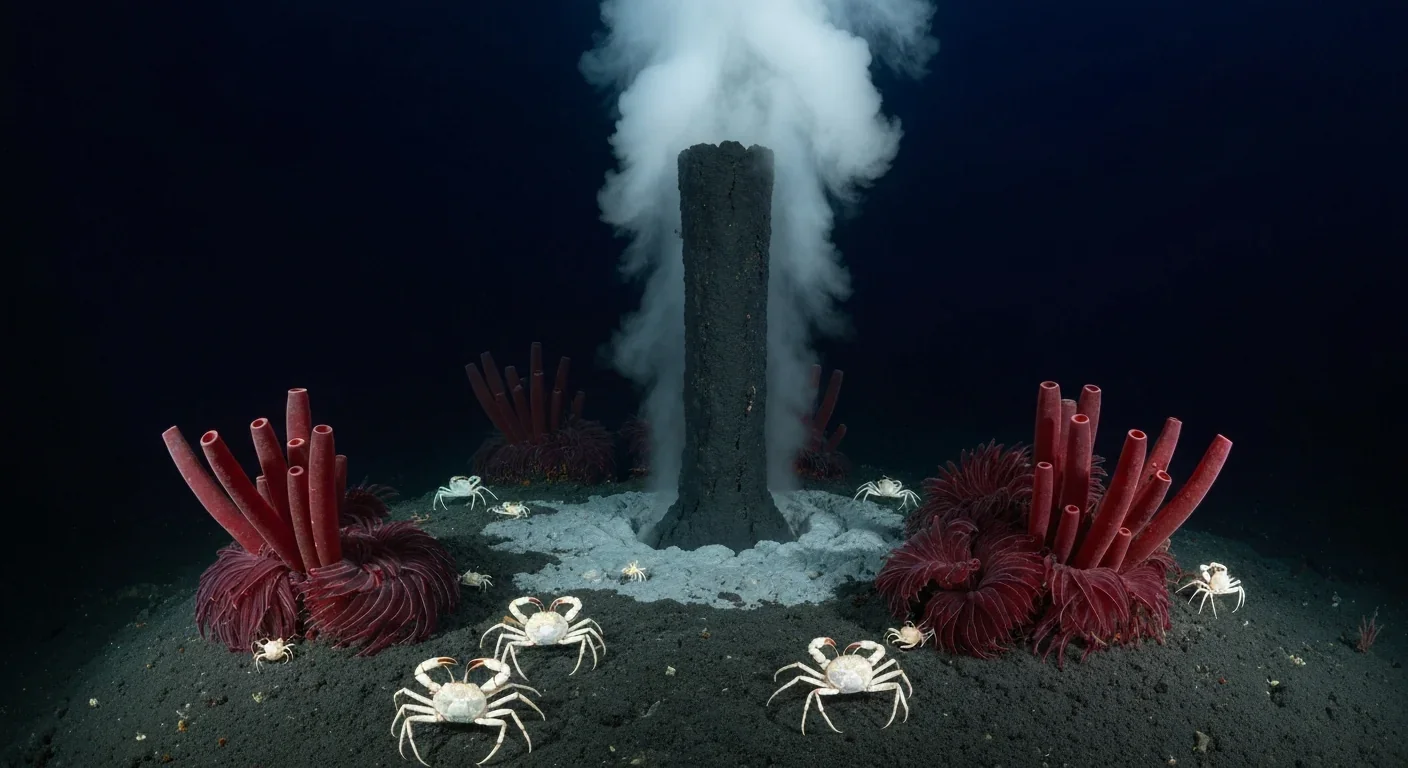

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Library socialism extends the public library model to tools, vehicles, and digital platforms through cooperatives and community ownership. Real-world examples like tool libraries, platform cooperatives, and community land trusts prove shared ownership can outperform both individual ownership and corporate platforms.

D-Wave's quantum annealing computers excel at optimization problems and are commercially deployed today, but can't perform universal quantum computation. IBM and Google's gate-based machines promise universal computing but remain too noisy for practical use. Both approaches serve different purposes, and understanding which architecture fits your problem is crucial for quantum strategy.